Ever wondered how AI detectors manage to say, “This text looks 92 percent AI-generated”? I used to think it was pure magic too, some digital Sherlock Holmes sniffing out suspicious sentences. But when you peek under the hood, AI detection isn’t magic at all. It’s math, patterns, and a surprising amount of guesswork.

Let’s unpack how these detectors actually work, why they often disagree, and whether you can trust them in a world where AI keeps getting smarter every day.

What Exactly Is an AI Detector?

An AI detector is a program that tries to figure out whether a piece of text was written by a human or generated by an AI like ChatGPT, Claude, or Gemini. Think of it like a digital fingerprint scanner for writing styles.

The goal sounds simple: spot the subtle differences between human thinking and machine prediction. But that “simple” goal turns into a massive challenge because modern AI models now write almost exactly like humans.

The Core Idea: Predictability

The secret sauce behind most AI detectors is predictability.

Every word in a sentence has a probability: the likelihood that it follows the words before it. For example:

“The cat sat on the ___.”

A human might choose mat, sofa, or roof depending on the story. An AI language model, however, tends to pick words that are statistically likely given its training data.

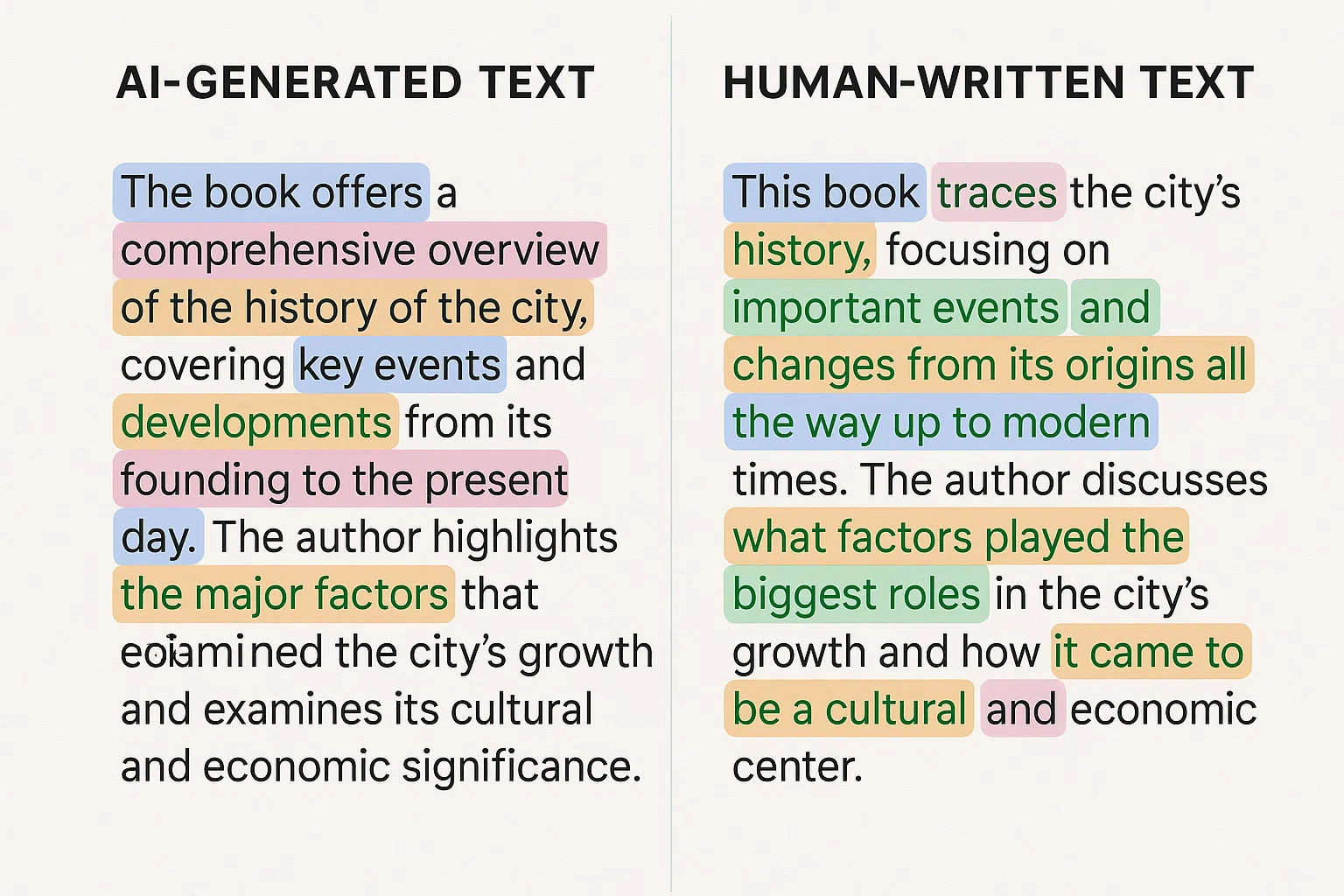

AI-generated text tends to be more predictable and statistically consistent. Human writing is messier: we mix short and long sentences, toss in metaphors, and sometimes break grammar rules just to sound natural.

Detectors measure this predictability to decide: Does this text look too perfect to be human?

Step-by-Step: How AI Detectors Actually Work

Here’s how most detection tools break down a piece of writing:

1. Token Analysis

Detectors split text into tokens (tiny chunks like words or punctuation). Then they check how likely each token was to appear, based on known AI model behavior.

2. Perplexity Check

This is the big one.

Perplexity measures how “surprised” a model is by the next word in a sentence.

- Low perplexity = very predictable → probably AI-generated.

- High perplexity = less predictable → probably human.
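To make the idea concrete, here is a minimal sketch of the arithmetic behind perplexity. It assumes we already have the probability a language model assigned to each token; the function name `perplexity` and the sample probabilities are invented for illustration, and a real detector would pull these values from an actual model.

```python
import math

def perplexity(token_probs):
    """Perplexity = exp of the average negative log-probability per token."""
    if not token_probs:
        raise ValueError("need at least one token probability")
    avg_neg_log_prob = -sum(math.log(p) for p in token_probs) / len(token_probs)
    return math.exp(avg_neg_log_prob)

# Hypothetical per-token probabilities (illustrative values, not real model output).
predictable = [0.9, 0.8, 0.85, 0.9]   # the model was rarely surprised
surprising = [0.2, 0.05, 0.3, 0.1]    # the model was often surprised

print("predictable text:", round(perplexity(predictable), 2))
print("surprising text: ", round(perplexity(surprising), 2))
```

The first list produces the lower perplexity, which is the pattern a detector would read as "more AI-like." Where exactly to draw the line between the two is a choice each tool makes for itself.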

3. Burstiness Score

Humans vary sentence length, tone, and rhythm. AI often stays consistent. A burstiness test looks at how uneven or spontaneous the writing feels. If it’s too smooth, detectors raise a red flag.
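There is no single agreed formula for burstiness, so treat the following as one plausible sketch rather than what any particular detector does. It assumes burstiness can be approximated by how much sentence length varies (standard deviation divided by mean); the function names and sample texts are made up for illustration.

```python
import re
import statistics

def sentence_lengths(text):
    """Split on ., !, ? and count words per sentence (a crude splitter)."""
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    return [len(s.split()) for s in sentences]

def burstiness(text):
    """Coefficient of variation of sentence length: stdev / mean."""
    lengths = sentence_lengths(text)
    if len(lengths) < 2:
        return 0.0  # not enough sentences to measure variation
    return statistics.pstdev(lengths) / statistics.mean(lengths)

even = "The cat sat down. The dog ran off. The bird flew away."
uneven = ("Stop. The cat, which had been asleep on the roof all afternoon, "
          "finally sat up. Why?")

print("even:  ", burstiness(even))
print("uneven:", round(burstiness(uneven), 2))
```

The evenly paced text scores zero because every sentence has the same length, while the uneven one scores well above it. A score like this only captures rhythm, not tone, which is one reason it is usually combined with other signals.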

4. Stylometric Patterns

Some advanced detectors analyze style markers: punctuation, sentence openers, passive voice, and even emotional tone. These tiny quirks reveal the author’s “writing fingerprint.”
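As a rough illustration of what "style markers" can look like in practice, here is a small sketch that pulls a handful of them out of a text. The feature set and the name `style_features` are my own assumptions for the example; commercial detectors do not publish theirs, and they likely use many more.

```python
import re
from collections import Counter

def style_features(text):
    """Return a few simple style markers as a dict (illustrative feature set)."""
    words = re.findall(r"[A-Za-z']+", text)
    sentences = [s.strip() for s in re.split(r"[.!?]+", text) if s.strip()]
    # Which word does the writer tend to start sentences with?
    openers = Counter(s.split()[0].lower() for s in sentences)
    n_words = max(len(words), 1)  # avoid dividing by zero on empty input
    return {
        "commas_per_100_words": 100 * text.count(",") / n_words,
        "avg_word_length": sum(len(w) for w in words) / n_words,
        "vocabulary_richness": len({w.lower() for w in words}) / n_words,
        "most_common_opener": openers.most_common(1)[0][0] if openers else None,
    }

sample = "The cat sat. The cat slept, then it woke. Why did the cat move?"
features = style_features(sample)
for name, value in features.items():
    print(name, "=", value)
```

On their own these numbers say nothing about who wrote the text. They become useful only when compared against a large set of samples whose origin is already known.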

5. Model Comparison

Finally, the text is run through one or more trained models that compare it to millions of known AI samples. The output is usually a probability, something like “72 % AI-written.”

Popular AI Detectors You’ve Probably Seen

Let’s look at a few big names and what they claim to do:

| Tool | Core Strength | Weakness |
|---|---|---|
| GPTZero | Focuses on burstiness and perplexity; known for classroom use. | Struggles with edited AI text. |
| Copyleaks AI Detector | Integrates into LMS and Turnitin-style tools; multilingual support. | False positives in creative writing. |
| Originality.ai | Favored by content marketers; tracks both plagiarism and AI probability. | Paid tool, sometimes over-confident. |
| Turnitin AI Detection | Academic-grade analysis for schools. | Opaque criteria, often marks partial AI. |
| Sapling.ai / Writer.com Detectors | Built into enterprise platforms for business writing. | Works best only on English prose. |

These tools differ in algorithms and confidence levels, which explains why the same paragraph can score 10 % AI in one and 95 % AI in another.

Why AI Detection Is So Hard

AI detection is a constant cat-and-mouse game. Here’s why the cat often loses.

1. AIs Keep Learning

Every few months, models like GPT-5 or Claude 3.5 become better at mimicking human quirks. The smoother they get, the less “robotic” their patterns look.

2. Humans Use AI Hybrids

Writers often blend AI drafts with their own edits. Detectors struggle to classify such hybrid text because it’s genuinely part-AI, part-human.

3. Context Is Everything

AI detectors don’t understand context (sarcasm, humor, or personal experience) the way humans do. They might mark a heartfelt essay as “machine-generated” simply because it uses clean grammar.

4. False Positives Are Real

One major concern is accusing a human writer of using AI when they didn’t. Students, freelancers, and journalists have faced penalties because of inaccurate detectors.

Can AI Detectors Be Trusted?

Here’s the uncomfortable truth: not completely.

Detectors give probabilities, not proof. Think of them like weather forecasts: helpful, but rarely perfect. A “90 % chance of rain” doesn’t guarantee rain; it just means it’s likely based on past patterns.

In tests by universities and AI labs in 2024–2025, average detection accuracy hovered around 60–80 percent: better than random guessing, but far from foolproof.

Real-World Example

A professor at Stanford once ran the same student essay through five detectors:

- GPTZero → 92 % AI

- Copyleaks → 65 % AI

- Turnitin → 44 % AI

- Writer.com → 18 % AI

- Sapling → Human

Who was right? Turns out the essay was fully human-written.

That’s the core issue: the same text can yield wildly different results depending on the tool, the dataset, and even the text length.
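The disagreement in the example above is easy to quantify. This short sketch uses the four numeric scores from that list and summarizes how far apart they are. The helper name `summarize` and the 50 % cutoff are assumptions for illustration, not a standard any of these tools use.

```python
import statistics

# Scores (percent "AI") reported in the example above. Sapling returned a
# label ("Human") rather than a number, so it is left out of the arithmetic.
scores = {"GPTZero": 92, "Copyleaks": 65, "Turnitin": 44, "Writer.com": 18}

def summarize(scores, threshold=50):
    """Summarize disagreement; the 50 % cutoff is an arbitrary assumption."""
    values = list(scores.values())
    flagged = [name for name, score in scores.items() if score >= threshold]
    return {
        "mean": statistics.mean(values),
        "spread": max(values) - min(values),
        "flagged_as_ai": flagged,
    }

summary = summarize(scores)
print(summary)
```

A 74-point gap between the highest and lowest score, with the tools split evenly on either side of the cutoff, is a signal to distrust any single number rather than to average them into a verdict.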

What Makes Detection Unreliable?

Let’s break down the main reasons detectors often miss the mark.

1. Short Texts Are Tricky

Anything under 150 words doesn’t give enough data for reliable analysis. That’s why tweets, bios, or short essays often fool detectors easily.

2. Editing Confuses the Algorithm

If you run an AI paragraph through a human editor, paraphraser, or grammar tool, the “AI fingerprints” blur. This makes detection accuracy drop significantly.

3. Training Data Bias

Many detectors were trained largely on GPT-3 or GPT-3.5 outputs. Newer models like GPT-4 or Claude 3 produce more humanlike text, which breaks older detectors’ assumptions.

4. Language and Style Variation

Non-native English writing, poetic language, or technical jargon can appear “AI-like” even if written by a human. Cultural and linguistic variety makes universal detection nearly impossible.

What About “Undetectable” AI Tools?

If you’ve ever seen ads like “Bypass GPTZero: make your AI writing 100 % human!”, treat them with caution.

These so-called undetectable AI tools simply rephrase content, increase burstiness, and inject human-style randomness. They don’t make your text magically human; they just trick detectors into lowering confidence scores.

Ironically, many of these tools use the same AI engines that detectors try to catch. So it’s really AI fighting AI: a high-tech arms race where neither side truly wins for long.

How Reliable Is an Undetectable AI Detector?

Some startups now claim to have “AI-proof” detectors that can even catch paraphrased text. While impressive on paper, no detector can be 100 percent reliable.

Why? Because “AI” isn’t a single writing style anymore. It’s a moving target. Each new model writes differently, adapts contextually, and even imitates human imperfections.

Even companies that build detectors, like OpenAI, admit this. In 2023, OpenAI quietly retired its own AI classifier, citing its low rate of accuracy.

The Ethics of AI Detection

Beyond accuracy, there’s a moral layer we rarely discuss.

1. Academic Fairness

Students wrongly flagged as cheaters may lose grades or scholarships. Universities now advise instructors to verify with conversation, not just software.

2. Privacy and Data Use

Some detectors store or reuse your uploaded text to train their systems. Always check privacy policies before pasting sensitive content.

3. Trust in Human Creativity

Over-reliance on detection can discourage genuine writing. When every polished paragraph is suspected of being “too AI,” we risk punishing excellence.

How AI Detectors Will Evolve

Despite limitations, detection tech is improving fast. Here’s where it’s heading.

1. Multi-Signal Detection

Future systems will combine stylometry, metadata, and model fingerprints. They’ll track how a file was generated, not just how it reads.

2. Watermarking by Design

OpenAI, Anthropic, and Google are exploring digital watermarks embedded at the token level. If adopted widely, detectors could scan for hidden markers that prove text origin.
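To show roughly what "scanning for hidden markers" could mean, here is a toy sketch of one publicly described idea, often called a green-list watermark. It is a simplification I am assuming for illustration, not the method of any company named above: the generator quietly prefers "green" tokens chosen by a hash, and the detector checks whether green tokens show up more often than chance would allow. All names here (`is_green`, `watermark_z_score`, the `w0`…`w49` vocabulary) are hypothetical.

```python
import hashlib
import math

def is_green(prev_token, token, green_fraction=0.5):
    """Pseudo-randomly assign `token` to the green list, seeded by `prev_token`."""
    digest = hashlib.sha256(f"{prev_token}|{token}".encode("utf-8")).digest()
    return digest[0] / 256 < green_fraction

def watermark_z_score(tokens, green_fraction=0.5):
    """How far the green-token count sits from what chance would predict."""
    pairs = list(zip(tokens, tokens[1:]))
    if not pairs:
        raise ValueError("need at least two tokens")
    green = sum(is_green(prev, tok, green_fraction) for prev, tok in pairs)
    expected = green_fraction * len(pairs)
    std_dev = math.sqrt(len(pairs) * green_fraction * (1 - green_fraction))
    return (green - expected) / std_dev

# Toy "watermarked generator": always pick a green token from a tiny vocabulary.
vocab = [f"w{i}" for i in range(50)]
marked = ["start"]
for _ in range(40):
    marked.append(next(t for t in vocab if is_green(marked[-1], t)))

print("watermarked z-score:", round(watermark_z_score(marked), 2))
print("plain-list z-score: ", round(watermark_z_score(vocab), 2))
```

A large positive z-score suggests the text was produced with the watermark; ordinary text should usually land near zero. Paraphrasing swaps tokens and erodes the signal, so a watermark like this would not make detection foolproof either.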

3. AI-Assisted Verification

Instead of labeling text “AI or not,” next-gen detectors may summarize how it was likely created, e.g., “Human draft refined by AI grammar correction.”

4. Cross-Modal Detection

Expect detectors that analyze tone of voice in audio, rhythm in video scripts, or citation behavior in academic papers, making detection multidimensional.

A Quick Myth-Busting Session

Let’s clear a few common myths.

| Myth | Reality |
|---|---|
| AI detectors can always catch ChatGPT. | False. Newer models produce human-like patterns that often pass undetected. |
| Changing a few words won’t fool detectors. | Sometimes it will. Small edits can raise perplexity enough to flip results. |
| Paid detectors are always more accurate. | Not necessarily; price doesn’t equal precision. |
| Detection equals plagiarism check. | Completely different things: plagiarism checks find copied text, not AI-generated text. |

How to Use AI Detectors the Smart Way

Detectors can still be incredibly useful if you treat them as advisors, not judges.

Here’s how I recommend using them:

- Run multiple tools. Never rely on one detector. Cross-check results to see patterns.

- Look for reasoning, not just numbers. A “93 % AI” score means little without the why behind it.

- Use them for your own audits. Freelancers and marketers can self-check before submission to meet client policies.

- Be transparent with clients or teachers. If you used AI for brainstorming, say so. Honesty often matters more than purity.

- Stay updated. As models evolve, so do detectors. An article from six months ago may already be outdated.

Can AI Be 100 % Trusted in Today’s World?

That question cuts both ways: it applies to AI writers and AI detectors alike.

AI itself isn’t “trustworthy” or “untrustworthy.” It’s a tool. Reliability depends on human use, ethical intent, and transparency. The same applies to detectors.

We can’t demand perfection from algorithms built to analyze creativity. Human judgment must stay in the loop to interpret, contextualize, and decide what’s fair.

Real Talk: My Own Experiment

Last month, I tested five detectors using three texts:

- A ChatGPT-written blog.

- A human-written blog.

- A hybrid (AI + human editing).

Results:

- Pure AI text → flagged AI by 4 of 5 tools.

- Pure human text → flagged AI by 2 tools.

- Hybrid text → split 50/50.

Lesson learned? AI detection isn’t binary. It’s more like a fuzzy spectrum. Even “undetectable” text can still feel AI-assisted to a careful reader.

Where Does This Leave Writers?

If you’re a student, content creator, or freelance writer, don’t panic. The goal isn’t to hide from AI detectors but to write in a way that sounds genuinely you.

That means:

- Personal stories and lived experience.

- Emotions, opinions, humor, and rhythm.

- Natural imperfections that no algorithm can fake.

AI can help polish your work, but your individuality (the quirks, the hesitations, the voice) is what makes writing human.

Key Takeaways

| Insight | What It Means |
|---|---|
| AI detectors rely on predictability and perplexity. | They measure how statistically “smooth” your writing is. |
| Accuracy averages 60–80 %. | Useful guidance, not courtroom evidence. |
| False positives are common. | Always verify before accusing anyone. |
| AI writing evolves faster than detection tech. | Expect an ongoing arms race. |
| Ethical, transparent use beats perfect disguise. | Honesty builds long-term trust. |

Final Reflection

AI detection isn’t the enemy of writers; confusion is. Once you understand how detectors think, you stop fearing them and start using them wisely.

At the end of the day, technology can analyze patterns, but only people can interpret meaning. Whether you’re a student defending your essay or a marketer fine-tuning AI copy, remember this:

AI detectors don’t define authenticity. You do.

AI writing strategist with hands-on NLP experience, Liam simplifies complex topics into bite-sized brilliance. Trusted by thousands for actionable, future-forward content you can rely on.